Branded Content

Your AI Strategy Has a Latency Problem

Written by: Nick Albertini | Global Field CTO at Tealium

Updated 4:03 PM UTC, February 16, 2026

We are living through a massive explosion of intelligence. Every week, it seems, a new “state-of-the-art” model is released. There was GPT-4, then GPT-5 and GPT-5.2, then Google DeepMind’s Gemini 3, Claude 4.5, Grok 4.1, and so on, not to mention a steady stream of open-source models. The sheer velocity of model evolution is dizzying.

For CDOs, CTOs, and CIOs, this creates enormous pressure to “keep up.” But here is the reality check: the model is no longer your competitive advantage.

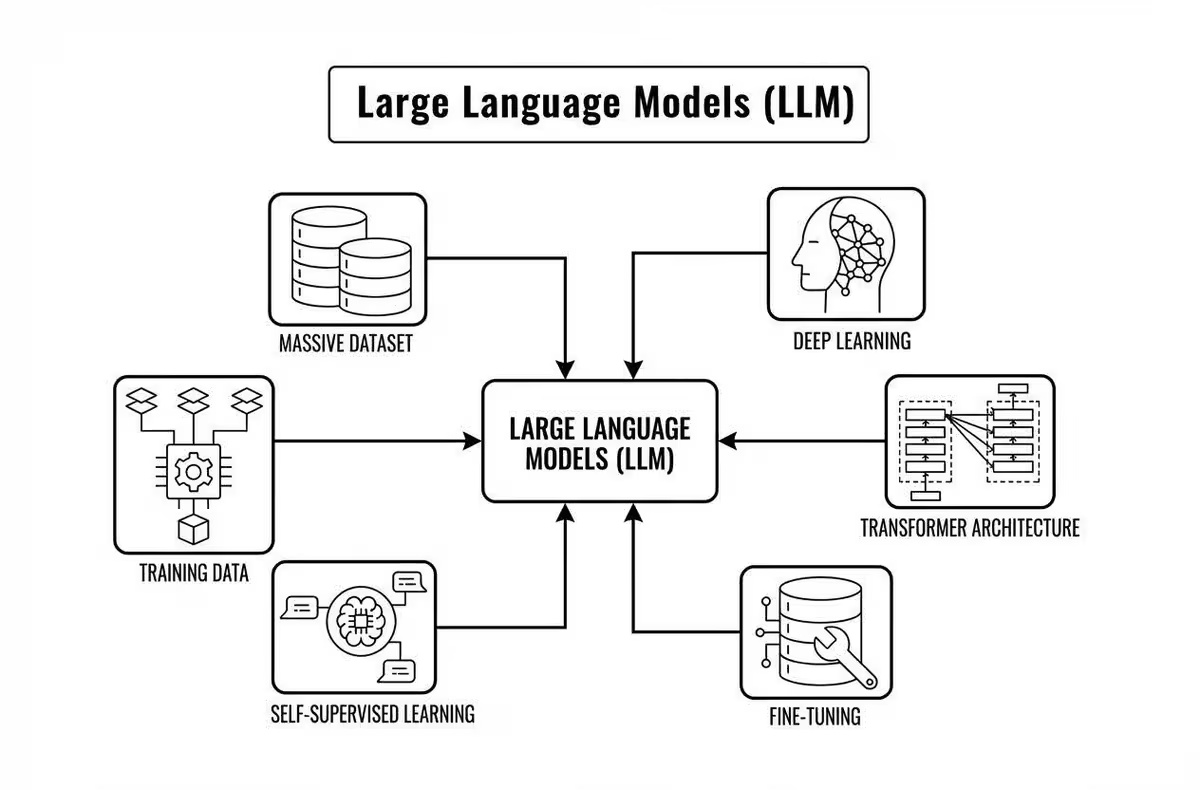

Intelligence has been democratized (finally!). Access to the world’s most powerful LLMs is now a commodity API call. Your competitor has exactly the same access to Google’s and OpenAI’s models as you do. If everyone is driving a Bugatti, the car itself ceases to be the differentiator.

So, if the engine is a commodity, what determines who wins the race? The fuel. To stay with this metaphor a moment longer, the underperformer is filling up with “Regular Unleaded” while the winner is running “High-Octane Race Fuel.” The underperformer is driving at 200 mph with last week’s map (batch data); the winner is using real-time GPS (real-time streaming). I could go on…

Clearly, the fuel is your data.

Your competitive advantage has shifted entirely from the intelligence you buy to the quality, speed, context, and safety of the data you feed it. That intelligence is now available to anyone with an internet connection and a good sense of how to prompt an AI model, so it can no longer set you apart.

In 2023, the question was “Which model should we use?” In 2026, the answer is “All of them.” Models will become specialized utilities: one for code, another for creative writing, another for data analysis.

Building a “better model” in-house offers diminishing returns for 99% of enterprises. You cannot outspend hyperscalers like Google or Microsoft on compute. The new battleground is AI-readiness.

AI-readiness isn’t about having a data lake. It is about the ability to feed these hungry, commoditized models high-fidelity, compliant, and contextual data in real time. The organizations that win won’t be the ones with the smartest algorithms; they will be the ones with the smartest pipelines.

The “Modern Data Stack” has become a significant bottleneck in the race, and it is failing AI

The uncomfortable truth is that the “Modern Data Stack” (ETL ➜ Warehouse ➜ BI) was built for human intelligence, not AI. It was designed to produce dashboards for executives to review on Monday morning. It runs on batch processing, and it tolerates latency.

But AI tolerates neither. I’ve said this many times before: AI succeeds when it runs on clean, consented, contextually rich, real-time data. If your data has to go through ETL, sit in a data warehouse, be indexed, and then be “Reverse ETL’d” back out to a personalization engine, it is hours (or days) old by the time it arrives.

The result is that you are feeding your AI stale context. If a user buys a pair of shoes at 9:00 AM but the warehouse doesn’t sync until midnight, your AI will spend the rest of the day trying to sell them the shoes they just bought. That isn’t intelligence; it’s hallucination caused by latency.
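To make the shoe example concrete, here is a toy Python sketch (not a production recommender) comparing a profile that only refreshes at a nightly warehouse sync with one that the purchase event updates as it happens. The catalog, the `recommend` helper, and the dates are hypothetical; the arithmetic at the end simply measures the 9:00 AM to midnight window described above.

```python
from datetime import datetime

# Hypothetical catalog and helper, for illustration only.
CATALOG = ["running shoes", "socks", "rain jacket"]


def recommend(purchased: set[str]) -> list[str]:
    """Toy recommender: suggest every catalog item the profile says is not yet bought."""
    return [item for item in CATALOG if item not in purchased]


purchase_time = datetime(2026, 2, 16, 9, 0)    # shoes bought at 9:00 AM
next_batch_sync = datetime(2026, 2, 17, 0, 0)  # warehouse syncs at midnight

# Batch: until the midnight sync, the profile the AI reads has no record of the purchase.
batch_profile: set[str] = set()
# Streaming: the purchase event updates the profile the moment it happens.
streamed_profile = {"running shoes"}

stale_hours = (next_batch_sync - purchase_time).total_seconds() / 3600

print(recommend(batch_profile))     # ['running shoes', 'socks', 'rain jacket']
print(recommend(streamed_profile))  # ['socks', 'rain jacket']
print(stale_hours)                  # 15.0
```

The recommender logic is identical in both cases; only the age of its input changes the answer.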

Then there is the risk of feeding raw data into models. It is a veritable governance minefield if consent is not clearly captured and carried through the entire data chain.

Once an LLM “learns” a piece of data, it is incredibly difficult to make the model “unlearn” it. If you accidentally train a model on PII (personally identifiable information) or on data from a user who opted out of tracking, you have poisoned the well. You face the nightmare scenario of having to delete the entire model to comply with a “right to be forgotten” request.

Finally, there is the cost of complexity: moving massive amounts of raw data into storage just to query it back out for inference. You pay for the storage, you pay for the compute to move the data, and you pay for the latency in lost revenue.

To fix this, we need to understand the difference between teaching an AI and using an AI.

Your data warehouse is excellent for training (teaching the model using historical data), but it is terrible for inference (the moment the model actually “thinks” and makes a prediction).

When a customer is on your site, you cannot afford to send their data to a warehouse, wait for a batch process, and then ask the model for a prediction. That is too slow.

The required architectural pivot is a move toward Direct-to-Model data streams. This is where platforms built with real-time data at their core bridge the gap. They let you create a real-time, consented data stream that bypasses the “storage” layer (the warehouse) and goes straight to the “intelligence” layer (the model).

This stream has four non-negotiable characteristics:

1. Context: Raw clickstreams are weak fuel

An AI agent doesn’t just need to know “User clicked X.” It needs the full story. Using an open schema, these platforms enrich the stream in real time, passing along a JSON object that carries the visitor’s history, current intent (e.g., “high urgency”), consent status, and lifetime value. Handing the AI a complete set of contextualized data means it doesn’t have to guess and, ultimately, hallucinate to fill in the gaps.
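A minimal Python sketch of such an enriched event follows. The field names, the `VisitorProfile` class, and the `infer_intent` heuristic are hypothetical stand-ins, not any vendor's actual schema or API; the point is only that the payload carries history, intent, consent, and lifetime value alongside the raw click.

```python
import json
from dataclasses import dataclass, field


@dataclass
class VisitorProfile:
    # Hypothetical profile fields; a real platform defines its own schema.
    visitor_id: str
    recent_events: list[str] = field(default_factory=list)
    lifetime_value: float = 0.0
    ai_consent: bool = False


def infer_intent(profile: VisitorProfile, event: str) -> str:
    # Toy heuristic (assumption): a cart add with a product view among the
    # last three events is labeled "high urgency".
    if event == "add_to_cart" and "product_view" in profile.recent_events[-3:]:
        return "high urgency"
    return "browsing"


def enrich(profile: VisitorProfile, event: str) -> str:
    """Wrap a raw click event in the profile context a model would need."""
    payload = {
        "event": event,
        "visitor_id": profile.visitor_id,
        "history": profile.recent_events[-5:],  # most recent events only
        "intent": infer_intent(profile, event),
        "consent": {"ai_processing": profile.ai_consent},
        "lifetime_value": profile.lifetime_value,
    }
    return json.dumps(payload)


profile = VisitorProfile("v-123", ["home", "product_view"], 842.50, ai_consent=True)
print(enrich(profile, "add_to_cart"))
```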

2. Consent: Governance cannot be an afterthought; it must be the gatekeeper

Consent needs to be enforced before the data hits the stream. If a user hasn’t opted in to AI processing, their data should never leave the data layer. This prevents the “unlearning” problem before it starts: your model is safe because it never tasted the forbidden fruit, and you never face the burden of trying to “untrain” it. Keep the consent flag attached to the profile throughout the entire data journey.
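The gatekeeper idea can be sketched as a filter that sits in front of the stream. This illustrative Python generator assumes each event is a plain dict carrying a `consent` block with a hypothetical `ai_processing` flag. It is default-deny (a missing flag counts as an opt-out) and forwards the consent block unchanged so downstream stages can re-check it.

```python
from collections.abc import Iterable, Iterator
from typing import Any

Event = dict[str, Any]  # hypothetical event shape: a plain dict


def consent_gate(events: Iterable[Event]) -> Iterator[Event]:
    """Forward only events whose consent explicitly allows AI processing."""
    for event in events:
        consent = event.get("consent") or {}
        # Default-deny: a missing or non-True flag is treated as an opt-out,
        # so the event never leaves this function.
        if consent.get("ai_processing") is True:
            # The consent block stays attached so later stages can re-check it.
            yield event


incoming = [
    {"visitor_id": "a", "consent": {"ai_processing": True}},
    {"visitor_id": "b", "consent": {"ai_processing": False}},
    {"visitor_id": "c"},  # consent never captured
]
for event in consent_gate(incoming):
    print(event["visitor_id"])  # prints only: a
```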

3. Real-time: The stream happens in milliseconds

When a user adds an item to the cart, that signal reaches the AI model within milliseconds. The AI can generate a “Next Best Action” (e.g., a discount code or a support chat) while the page is still loading.
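One way to keep that decision inside the page-load window is to give the model call an explicit latency budget and fall back to a safe default when it is exceeded. This Python sketch assumes a hypothetical `call_model` stand-in (a simple rule, not a real model endpoint) and an illustrative 100 ms budget; neither comes from the article or from a specific product.

```python
import concurrent.futures as cf
import time
from typing import Callable

LATENCY_BUDGET_S = 0.1  # illustrative 100 ms budget, not a recommended value
_pool = cf.ThreadPoolExecutor(max_workers=4)


def call_model(event: dict) -> str:
    """Hypothetical stand-in for a model endpoint that returns an action name."""
    if event.get("lifetime_value", 0) > 500:
        return "offer_support_chat"
    return "offer_discount_code"


def next_best_action(
    event: dict,
    model: Callable[[dict], str] = call_model,
    budget_s: float = LATENCY_BUDGET_S,
) -> str:
    """Ask the model for a next best action, but never wait past the budget."""
    future = _pool.submit(model, event)
    try:
        return future.result(timeout=budget_s)
    except cf.TimeoutError:
        # Too slow for this page view: degrade gracefully instead of blocking.
        return "no_action"


def slow_model(event: dict) -> str:
    time.sleep(0.5)  # simulates an overloaded endpoint
    return "too_late"


print(next_best_action({"type": "add_to_cart", "lifetime_value": 900}))  # offer_support_chat
print(next_best_action({"type": "add_to_cart"}, model=slow_model))      # no_action
```

The fallback is a design choice: on a live page, a missing suggestion is usually cheaper than a stalled one.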

4. Quality: Models hate noise

The taxonomy must be standardized across all channels (web, app, call center, offline) so the model receives a consistent dialect, which reduces the need for data cleaning.
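As a rough illustration, standardizing the taxonomy can be as simple as an explicit mapping from each channel's native event names to one shared vocabulary, with unmapped events rejected rather than passed through as noise. The channel names, raw event names, and target labels in this Python sketch are hypothetical.

```python
# Hypothetical channel-specific event names mapped onto one shared taxonomy.
TAXONOMY = {
    ("web", "addToCart"): "cart_add",
    ("app", "ADD_ITEM"): "cart_add",
    ("call_center", "order_item_added"): "cart_add",
    ("web", "checkout_complete"): "purchase",
    ("offline", "pos_line_item"): "purchase",
}


def normalize(channel: str, raw_event: dict) -> dict:
    """Translate a channel-specific event into the shared dialect, or raise."""
    key = (channel, raw_event["name"])
    if key not in TAXONOMY:
        # Reject unknown names instead of letting noise reach the model.
        raise ValueError(f"unmapped event {key!r}")
    return {
        "event": TAXONOMY[key],
        "channel": channel,
        # Same SKU format regardless of which channel produced it.
        "sku": str(raw_event.get("sku", "")).strip().upper(),
    }


print(normalize("web", {"name": "addToCart", "sku": " ab-1 "}))
print(normalize("app", {"name": "ADD_ITEM", "sku": "AB-1"}))
# Both events come out as "cart_add" with sku "AB-1".
```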

In conclusion, the infrastructure is the refinery. The models will keep changing: today it’s GPT-5.2, tomorrow it’s Gemini 3, next week it’s something we haven’t even heard of yet.

If you build your strategy around a specific model, you will be obsolete in six months, or sooner.

But if you build your strategy around the data pipeline (the infrastructure that captures data, cleans it, enforces consent, and delivers context in real time), you have built a long-term asset. Stop trying to out-build the model makers. You can’t. Start building the pipeline that makes their models work for you.

Your competitive advantage isn’t the engine. It’s the fuel.

About the author:

Nick Albertini is the Global Field CTO at Tealium, where he champions strategic innovation in customer data and modern marketing technology. With more than 18 years of experience across all industry verticals, Albertini is a respected voice on data architecture and ecosystem transformation.

His background includes leading large teams of architects and data scientists to deliver omnichannel personalization for clients such as Uber, Dell, and M.D. Anderson Cancer Center. Albertini is passionate about helping brands create unique, personal customer experiences through robust data integration. He holds an MBA from The University of Texas at Dallas and a B.S. from Texas A&M University.